Face Recognition from Three-Dimensional Models

M.Sc. thesis, Etienne Beauchesne

Tags: color, ICP, illumination, 3D modeling, face recognition, texture

Date: 2002-12

Abstract

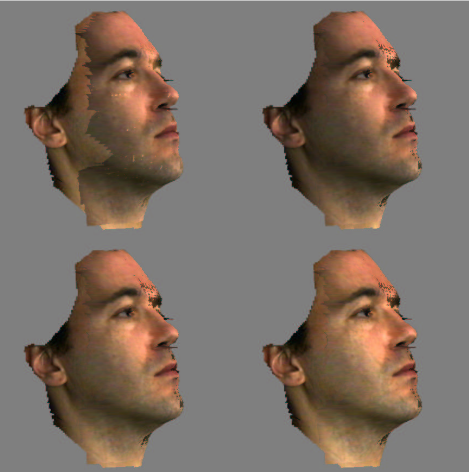

This thesis addresses two aspects of three-dimensional face recognition: color correction across several 3D scans and face recognition by 3D alignment. An acquisition with a structured-light scanner yields both the 3D shape of an object and its texture. When the whole region of interest cannot be acquired in a single scan, several scans must be merged. Merging the geometry of the parts is easy when their relative positions are known. Merging their textures is harder when they have been acquired under different illuminations or with different cameras, as is often the case in structured-light 3D acquisition. The method we present corrects the textures so that they become compatible, by “relighting” them under a common illumination, so that they can be merged afterwards. The second part of the thesis concerns recognition from 3D faces. The goal is to determine a person’s identity from a 3D scan of that person’s face and a database of 3D models. This “3D-3D” approach has rarely been seen in the literature. The basic operation in the proposed approach is the computation of a “distance” between two 3D models. To compute it, we align the two models with a modified version of Iterative Closest Point (ICP) and then measure their dissimilarity. This distance is then used to implement different face recognition scenarios.
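
To make the role of the “distance” concrete, here is a minimal sketch of how a model-to-model dissimilarity could drive two recognition scenarios, identification and verification. It assumes the models are already aligned (the ICP step is omitted) and uses the mean closest-point distance as a stand-in, since the abstract does not specify the thesis’s actual dissimilarity measure. The function names, the threshold, and the toy point sets are all hypothetical.

```python
import math


def mean_closest_distance(probe, model):
    """Mean distance from each probe point to its nearest model point.

    Assumption: both point sets are already expressed in the same frame,
    i.e. the alignment step has been done beforehand.
    """
    total = 0.0
    for p in probe:
        total += min(math.dist(p, q) for q in model)
    return total / len(probe)


def identify(probe, gallery):
    """Identification: return (name, distance) of the closest gallery model."""
    scores = {name: mean_closest_distance(probe, pts)
              for name, pts in gallery.items()}
    best = min(scores, key=scores.get)
    return best, scores[best]


def verify(probe, model, threshold):
    """Verification: accept the claimed identity if the distance is small enough."""
    return mean_closest_distance(probe, model) <= threshold


# Toy point sets standing in for 3D face models (illustrative only).
gallery = {
    "subject_a": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    "subject_b": [(0.0, 0.0, 1.0), (1.0, 0.0, 1.5), (0.0, 1.0, 2.0)],
}
probe = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.1), (0.0, 1.0, 0.1)]

name, score = identify(probe, gallery)
print(name, score)
```

The brute-force nearest-point search is quadratic in the number of points. For real scans a spatial index such as a k-d tree would normally replace it, but the scenario logic stays the same.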
