Perception of blending in stereo motion panoramas

Journal: TAP

Authors: Michael Langer, Sébastien Roy, Vincent Chapdelaine-Couture

Keywords: immersive environment, perception, stereo

Date: 2012-07

Abstract

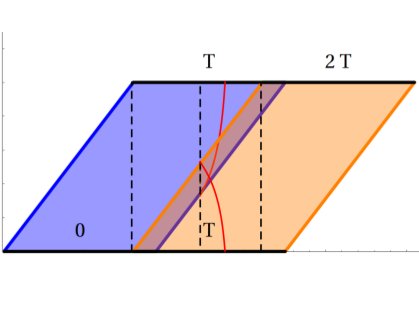

Most methods for synthesizing panoramas assume that the scene is static. A few methods have been proposed for synthesizing stereo or motion panoramas, but there has been little attempt to synthesize panoramas that have both stereo and motion. One faces several challenges in synthesizing stereo motion panoramas, for example, to ensure temporal synchronization between left and right views in each frame, to avoid spatial distortion of moving objects, and to continuously loop the video in time. We have recently developed a stereo motion panorama method that tries to address some of these challenges. The method blends space-time regions of a video XYT volume, such that the blending regions are distinct and translate over time. This paper presents a perception experiment that evaluates certain aspects of the method, namely how well observers can detect such blending regions. We measure detection time thresholds for different blending widths and for different scenes, and for monoscopic versus stereoscopic videos. Our results suggest that blending may be more effective in image regions that do not contain coherent moving objects that can be tracked over time. For example, we found moving water and partly transparent smoke were more effectively blended than swaying branches. We also found that performance in the task was roughly the same for mono versus stereo videos.
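To make the idea of a blending region that translates over time concrete, here is a minimal NumPy sketch. It is not the authors' method: it only cross-fades two video volumes with a linear ramp whose center moves horizontally from frame to frame. The function name `blend_translating`, the parameters `width`, `speed`, and `start`, the (T, Y, X) axis order, and the linear ramp shape are all assumptions made for illustration.

```python
import numpy as np

def blend_translating(vol_a, vol_b, width, speed, start=0.0):
    """Cross-fade two (T, Y, X) video volumes inside a moving vertical band.

    Hypothetical illustration only: the band is `width` pixels wide, its
    center sits at x = start + speed * t in frame t, and the blend weight
    ramps linearly from vol_a (left of the band) to vol_b (right of it).
    """
    if vol_a.shape != vol_b.shape:
        raise ValueError("both volumes must have the same (T, Y, X) shape")
    if width <= 0:
        raise ValueError("width must be positive")

    n_frames, _, n_cols = vol_a.shape
    x = np.arange(n_cols, dtype=float)[None, :]      # shape (1, X)
    t = np.arange(n_frames, dtype=float)[:, None]    # shape (T, 1)
    center = start + speed * t                       # band center per frame

    # Weight of vol_b: 0 left of the band, 1 right of it, linear inside.
    w = np.clip((x - center) / width + 0.5, 0.0, 1.0)  # shape (T, X)
    w = w[:, None, :]                                  # broadcast over rows

    return (1.0 - w) * vol_a + w * vol_b


# Usage: blend an all-black clip into an all-white clip.
a = np.zeros((3, 2, 8))
b = np.ones((3, 2, 8))
out = blend_translating(a, b, width=4, speed=1, start=3)
```

In this sketch a wider `width` spreads the transition over more pixels, which corresponds loosely to the "blending width" varied in the experiment; the actual blending function used in the paper may differ.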
