Omnistereo video textures without ghosting

Conference: 3DV

Authors: Michael Langer, Sébastien Roy, Vincent Chapdelaine-Couture

Keywords: graph-cut, omnistereo

Date: 2013-07

Abstract

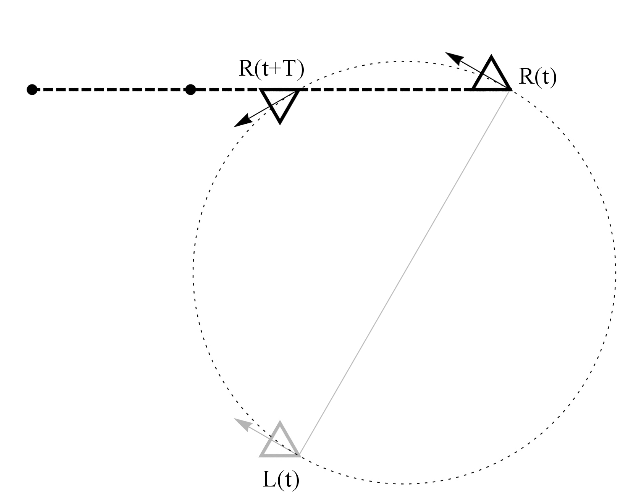

An omnistereo pair of images provides stereo depth information over up to 360 degrees around a central observer. A method for synthesizing omnistereo video textures was recently introduced that blends overlapping stereo videos filmed several seconds apart. Although it produced loopable omnistereo videos that can be displayed over up to 360 degrees around a viewer, ghosting was visible within the blended overlaps. This paper presents a stereo stitching method that renders these overlaps without any ghosting, using a graph-cut minimization. We show results for scenes with different types of motion, such as flowing water, leaves in the wind and moving people.
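The abstract only states that the overlaps are stitched with a graph-cut minimization; it does not give the energy used. To illustrate why a seam avoids ghosting where blending does not, here is a minimal single-frame sketch, assuming two grayscale images `a` and `b` that already overlap pixel-for-pixel. It uses the pixel-difference seam cost from Kwatra et al.'s Graphcut Textures (2003), not the paper's own formulation, and it ignores the temporal and left/right-eye consistency that a stereo video method must also handle. The function names `graph_cut_seam` and `stitch` are hypothetical.

```python
from collections import deque

INF = float("inf")


def graph_cut_seam(a, b):
    """Label each overlap pixel 0 (copy from a) or 1 (copy from b) via min cut.

    Hypothetical sketch: leftmost column is forced to a, rightmost to b.
    """
    h, w = len(a), len(a[0])
    if w < 2:
        raise ValueError("overlap must be at least 2 pixels wide")
    src, snk = h * w, h * w + 1
    cap = {}  # residual capacities: cap[u][v]

    def add_edge(u, v, c):
        cap.setdefault(u, {}).setdefault(v, 0)
        cap.setdefault(v, {}).setdefault(u, 0)
        cap[u][v] += c

    def idx(r, c):
        return r * w + c

    def diff(r, c):
        return abs(a[r][c] - b[r][c])

    for r in range(h):
        for c in range(w):
            # 4-connected neighbours; the seam is cheap where a and b agree.
            for rr, cc in ((r, c + 1), (r + 1, c)):
                if rr < h and cc < w:
                    cost = diff(r, c) + diff(rr, cc)
                    add_edge(idx(r, c), idx(rr, cc), cost)
                    add_edge(idx(rr, cc), idx(r, c), cost)
        # Hard constraints tie the borders to their source images.
        add_edge(src, idx(r, 0), INF)
        add_edge(idx(r, w - 1), snk, INF)

    # Edmonds-Karp max flow: repeatedly push flow along shortest paths.
    while True:
        parent = {src: None}
        queue = deque([src])
        while queue and snk not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if snk not in parent:
            break  # 'parent' now holds the source side of a minimum cut
        flow, v = INF, snk
        while parent[v] is not None:
            flow = min(flow, cap[parent[v]][v])
            v = parent[v]
        v = snk
        while parent[v] is not None:
            u = parent[v]
            cap[u][v] -= flow
            cap[v][u] += flow
            v = u

    return [[0 if idx(r, c) in parent else 1 for c in range(w)] for r in range(h)]


def stitch(a, b):
    """Copy every pixel from exactly one image instead of averaging them."""
    labels = graph_cut_seam(a, b)
    return [[b[r][c] if labels[r][c] else a[r][c] for c in range(len(a[0]))]
            for r in range(len(a))]
```

For example, `stitch([[0, 0, 0, 0]], [[5, 5, 0, 5]])` places the seam next to the third pixel, where both images agree, and returns `[[0, 0, 0, 5]]`. Because each output pixel comes from a single source, moving content is never superimposed at half opacity, which is the ghosting that blending produces.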
